"Just ban the nazis," and the exasperation of sociotechnical platform politics

Eric Gilbert

feb 2019

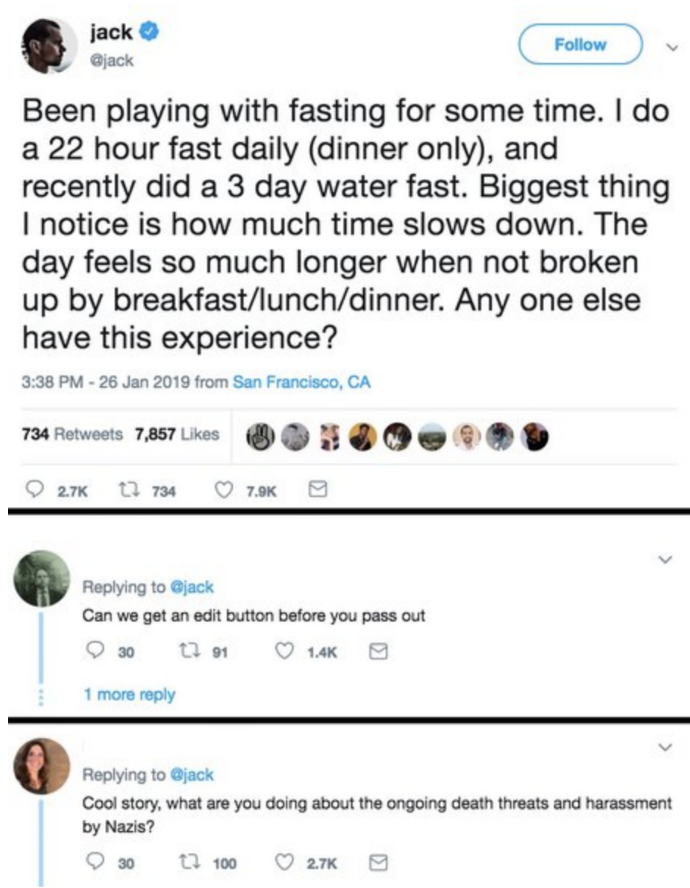

A constant refrain on Twitter and elsewhere, "just ban the nazis" and its variants appear in discussions around platform content decisions, but also on completely unrelated topics:

(@jack tweet about fasting, pointed out to me by Casey Fiesler)

I also want the nazis banned. (I am hopeful, in fact, that our work on Reddit’s ban of two hate groups led to the subsequent ban of Nazi groups on the site.) I find this emergent refrain interesting, however, for different reasons. It conveys both the ease with which most people can identify crucial deviant behavior ("I mean, they're nazis, come on!"), and the exasperation we feel because we can't do anything about it.1 It puts into stark relief our lack of agency in platform politics, as well as the sociotechnical institutions I think we deserve, but don't yet have.

People break rules and/or norms in online communities all the time.2 Some of those are highly contextual, highly dependent on a particular community's expectations. A garden-variety example: it would be a norm (and rule) violation to post an unlabeled spoiler on r/asoiaf—the subreddit for Game of Thrones and the associated books. In other cases, people violate what nearly everyone thinks constitutes acceptable social behavior: i.e., the nazis.

“just ban the nazis” highlights the need for two types of sociotechnical institutions we lack on modern social platforms: 1) sociotechnical systems for the creation and distribution of community-specific norms (where we can collectively decide “nazis=bad”), and 2) sociotechnical systems for enforcing those norms (where we can collectively enforce “nazis=gone”).

Norm institutions

We need systems that allow community members to draft rules that govern social behavior online, reach consensus on those rules, and then make those rules visible to everyone in effective ways. Each part of this is hard.

In addition to supporting groups of various sizes and commitment levels, such a system would have to be able to live in the fractured, networked publics around which most modern social platforms are designed. Where exactly are the community boundaries on Twitter? Who gets to invent these normative guidelines? What is an acceptable level of agreement upon proposed rules? Finally, how do we make those rules visible to people as they participate in massive, fast-moving conversations online?

Enforcement institutions

Next, we need real power handed to groups and communities to take concrete action against an actor who violates established norms. These sanctions should have nuance: warnings, temporary community bans, permanent bans, votes to expel from the platform entirely, freezes on new followers, etc., in addition to content-specific sanctions (e.g., takedown orders for specific comments or threads). Report forms that go god-knows-where are insufficient. The code to carry out these sanctions should be immediately bound to the authority of the groups entrusted to create the norms above.

However

I don't think Twitter (or any other contemporary social platform) will ever do this. The arrangements of technology and capital that created today's social platforms care about "community standards" insofar as they protect firms from legal liability and PR disasters.3,4 Someday we'll get something better.

1. The reasons why platforms choose not to act in general are complex and span legal, financial, and political concerns. See Tarleton Gillespie's new book.

2. This of course happens offline too, and there is a rich history of sociological work on deviance exploring it.

3. "Community standards" groups notably lack any real role for the "community;" yes, those standards may arise from a set of focus groups, but the community has little to no political power to dictate that its expectations be codified into the platform's standards.

4. Or, in Tumblr’s case, (at least perceived) existential financial threat.